CITS2002 Systems Programming

The Principle of Referential Locality

Numerous studies of the memory accesses of processes have observed that memory references cluster in certain parts of the program: over long periods, the centres of the clusters move, but over shorter periods, they are fairly static. For most types of programs, it is clear that:

CITS2002 Systems Programming, Lecture 14, p1, 12th September 2023.

Paging vs Partitioning

When we compare paging with the much simpler technique of partitioning, we see two clear benefits:

If the above two characteristics are present, then it is not necessary for all pages of a process to be in memory at any one time during its execution.
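One way to make this concrete is to count how many distinct pages a process touches over a short window of its memory references. The following is only an illustrative sketch: the function name `distinct_pages_in_window`, the 4096-byte page size, the `MAX_PAGES` bound, and the idea of a recorded trace of addresses are all assumptions made for the example, not part of the lecture material.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE   4096u   // assumed page size, in bytes
#define MAX_PAGES   1024u   // assumed upper bound on the process's page count

// Count the distinct pages touched by the references
// trace[start] .. trace[start+window-1] (clipped to the end of the trace).
// Addresses at or beyond MAX_PAGES * PAGE_SIZE are ignored in this sketch.
size_t distinct_pages_in_window(const uint32_t trace[], size_t ntrace,
                                size_t start, size_t window)
{
    bool   seen[MAX_PAGES] = { false };
    size_t count = 0;

    assert(start <= ntrace);
    size_t end = (window > ntrace - start) ? ntrace : start + window;

    for (size_t i = start; i < end; ++i) {
        uint32_t page = trace[i] / PAGE_SIZE;     // page number of this address
        if (page < MAX_PAGES && !seen[page]) {
            seen[page] = true;
            ++count;
        }
    }
    return count;
}
```

If a process exhibits locality, the value returned for a short window should be much smaller than the process's total number of pages, which is why only those few pages need to be resident during that window.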


Advantages of Paging

Execution of any process can continue provided that the instruction it next wants to execute, or the data location it next wants to access, is in physical memory. If not, the operating system must load the required memory from the swapping (or paging) space before execution can continue.

However, the swapping space is generally on a slow device (a disk), so the paging I/O request forces the process to be Blocked until the required page of memory is available. In the interim, another process may be able to execute.

Before we consider how we can achieve this, and introduce additional efficiency, consider what advantages are now introduced:


Virtual Memory and Resident Working Sets

The principle of referential locality again tells us that at any time, only a small subset of a process's instructions and data will be required. We define the set of a process's pages that are in physical memory as its resident (or working) memory set.

    prompt> ps aux
    USER       PID %CPU %MEM   VSZ   RSS TTY      STAT START   TIME COMMAND
    root         1  0.0  0.1  1372   432 ?        S    Sep12   0:04 init
    root         4  0.0  0.0     0     0 ?        SW   Sep12   0:04 [kswapd]
    root       692  0.0  0.2  1576   604 ?        S    Sep12   0:00 crond
    xfs        742  0.0  0.8  5212  2228 ?        S    Sep12   0:23 xfs -droppriv -da
    root       749  0.0  0.1  1344   340 tty1     S    Sep12   0:00 /sbin/mingetty tt
    ...
    chris     3865  0.0  0.6  2924  1644 pts/1    S    Sep15   0:01 -zsh
    chris    25366  0.0  6.0 23816 15428 ?        S    14:34   0:06 /usr/bin/firefox
    chris    25388  0.0  1.4 17216  3660 ?        S    14:34   0:00 (dns helper)
    chris    26233  0.0  0.2  2604   688 pts/1    R    19:11   0:00 ps aux

In the steady state, the memory will be fully occupied by the working sets of the Ready and Running processes, but:


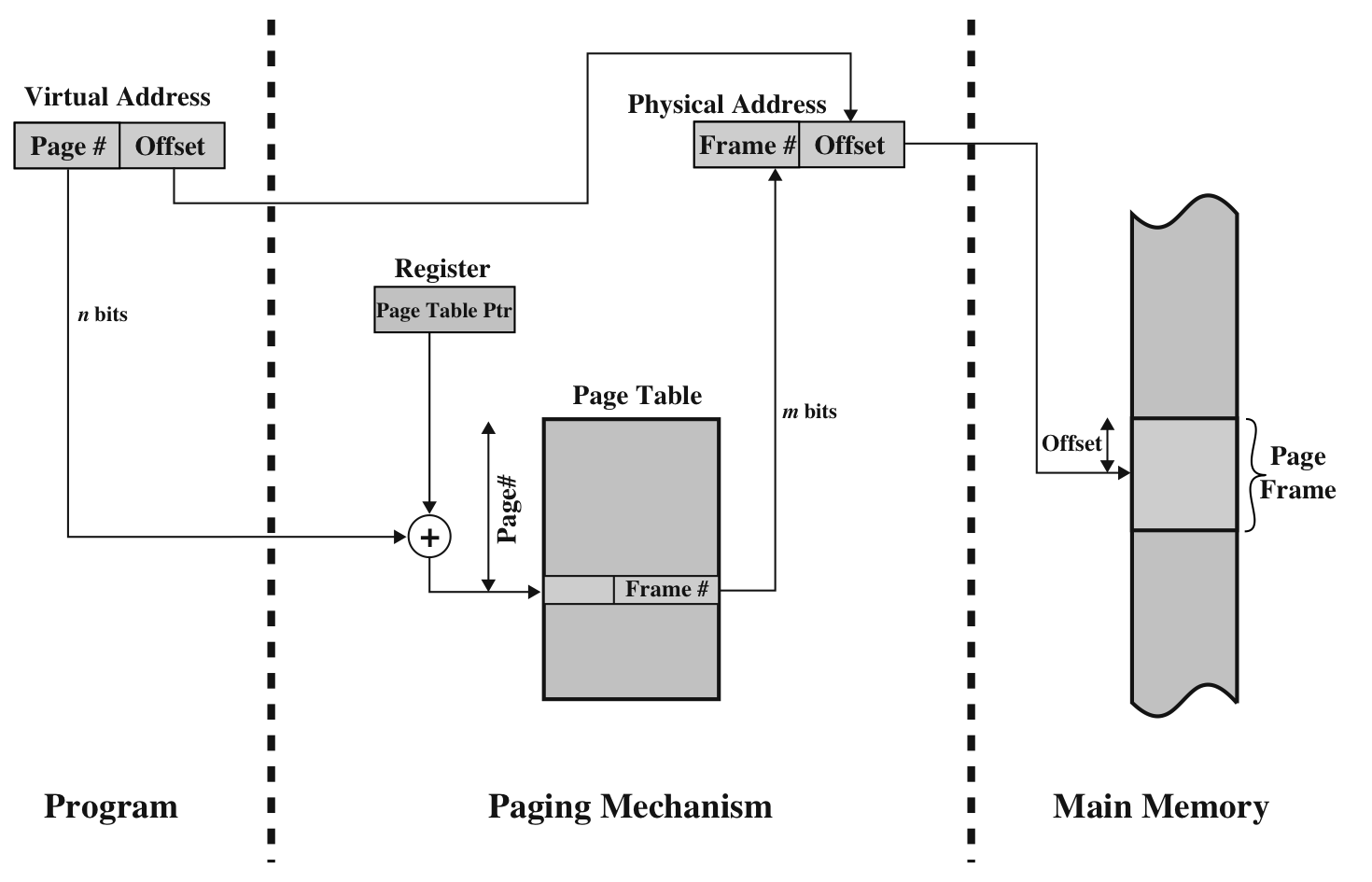

Virtual Memory Hardware using Page Tables

We saw that with simple paging, each process has its own page table entries. When a process's (complete) set of pages was loaded into memory, the current (hardware) page tables were saved and restored by the operating system.

Using virtual memory, the same approach is taken, but the contents of the page tables become more complex. Page table entries must include additional control information, indicating at least:

Address Translation in a Paging System
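The translation step can be sketched in C. This is a minimal illustration under stated assumptions, not a description of any particular hardware: the `PTE` structure, its `present` and `modified` bits (the usual textbook control bits, assumed here), the 4096-byte page size, the 16-page address space, and the function name `translate` are all hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define OFFSET_BITS 12u                    // assumed: 4096-byte pages
#define PAGE_SIZE   (1u << OFFSET_BITS)
#define NPAGES      16u                    // assumed: tiny 16-page address space

// One page table entry; the fields are assumed, textbook-style control bits.
typedef struct {
    bool     present;    // is the page currently held in a physical frame?
    bool     modified;   // has the page been written to since it was loaded?
    uint32_t frame;      // frame number, meaningful only if present
} PTE;

// Translate a virtual address using the given page table.
// Returns true and stores the physical address in *paddr on success;
// returns false to signal a page fault (or an out-of-range address).
bool translate(PTE table[], uint32_t vaddr, bool is_write, uint32_t *paddr)
{
    uint32_t page   = vaddr >> OFFSET_BITS;        // high bits: page number
    uint32_t offset = vaddr & (PAGE_SIZE - 1);     // low bits: offset in page

    assert(paddr != NULL);
    if (page >= NPAGES || !table[page].present) {
        return false;           // the OS would now load the page and retry
    }
    if (is_write) {
        table[page].modified = true;    // page must be written back if evicted
    }
    *paddr = (table[page].frame << OFFSET_BITS) | offset;
    return true;
}
```

In real systems this lookup is performed by the memory management hardware on every reference; the operating system only becomes involved when the lookup fails, which is the page fault case signalled here by returning `false`.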


Virtual Memory Page Replacement

When the Running process requests a page that is not in memory, a page fault results, and (if the memory is currently 'full') one of the pages currently in memory must be replaced by the required page. To make room for the required page, one or more existing pages must be "evicted" (to the swap space). Clearly, the working set of some process must be reduced.

However, if a page is evicted just before it is required (again), it'll just need to be paged back in! If this continues, the activity of page thrashing is observed. We hope that the operating system can avoid thrashing with an intelligent choice of the page to discard.
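The effect of the choice of victim page can be explored with a small simulation. The sketch below counts page faults for a given reference string under two common textbook policies, FIFO (evict the page loaded longest ago) and LRU (evict the page used least recently). These two policies are chosen here as examples and are not named in this section; the names `count_page_faults`, `Policy`, and `MAX_FRAMES` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_FRAMES 64   // assumed upper bound on the number of frames

typedef enum { POLICY_FIFO, POLICY_LRU } Policy;

// Count the page faults incurred by the reference string refs[0..nrefs-1]
// when only nframes frames of physical memory are available.
size_t count_page_faults(const int refs[], size_t nrefs,
                         size_t nframes, Policy policy)
{
    int    page[MAX_FRAMES];     // page held in each frame
    size_t stamp[MAX_FRAMES];    // FIFO: time loaded;  LRU: time last used
    size_t used = 0, faults = 0;

    assert(nframes > 0 && nframes <= MAX_FRAMES);
    for (size_t t = 0; t < nrefs; ++t) {
        size_t f;
        for (f = 0; f < used && page[f] != refs[t]; ++f) {
            ;                                   // search the resident pages
        }
        if (f < used) {                         // hit: page already resident
            if (policy == POLICY_LRU) {
                stamp[f] = t;                   // record the most recent use
            }
            continue;
        }
        ++faults;                               // miss: a page fault
        if (used < nframes) {
            f = used++;                         // a free frame is available
        } else {
            f = 0;                              // evict the smallest timestamp
            for (size_t i = 1; i < nframes; ++i) {
                if (stamp[i] < stamp[f]) {
                    f = i;
                }
            }
        }
        page[f]  = refs[t];
        stamp[f] = t;
    }
    return faults;
}
```

As a small illustration of thrashing, a process that cycles through pages 0, 1, 2, 3 repeatedly with only three frames faults on every single reference under both policies, because each page is evicted just before it is needed again. With four frames, the same reference string faults only four times.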


Virtual Memory Implementation Considerations

The many different implementations of virtual memory differ in their treatment of some common considerations:


Virtual Memory Implementation Considerations, continued

